From Early Experiments to Modern AI: The Evolution of Computer Vision

Computer vision is the branch of artificial intelligence that teaches machines to interpret and act on visual data.

It’s one of the most influential technologies shaping modern life, from detecting patterns in medical scans to helping cars drive themselves.

This article traces that journey.

We look back at the history of visual AI, the rise of machine learning, the deep learning revolution, and today’s computer vision applications before considering where computer vision might take us next.

First attempts at teaching computers to “see” simple patterns.

Computer vision kicked off in the same era as early AI research. Alan Turing had already posed the big question:

If humans can think and see, could machines learn to do the same?

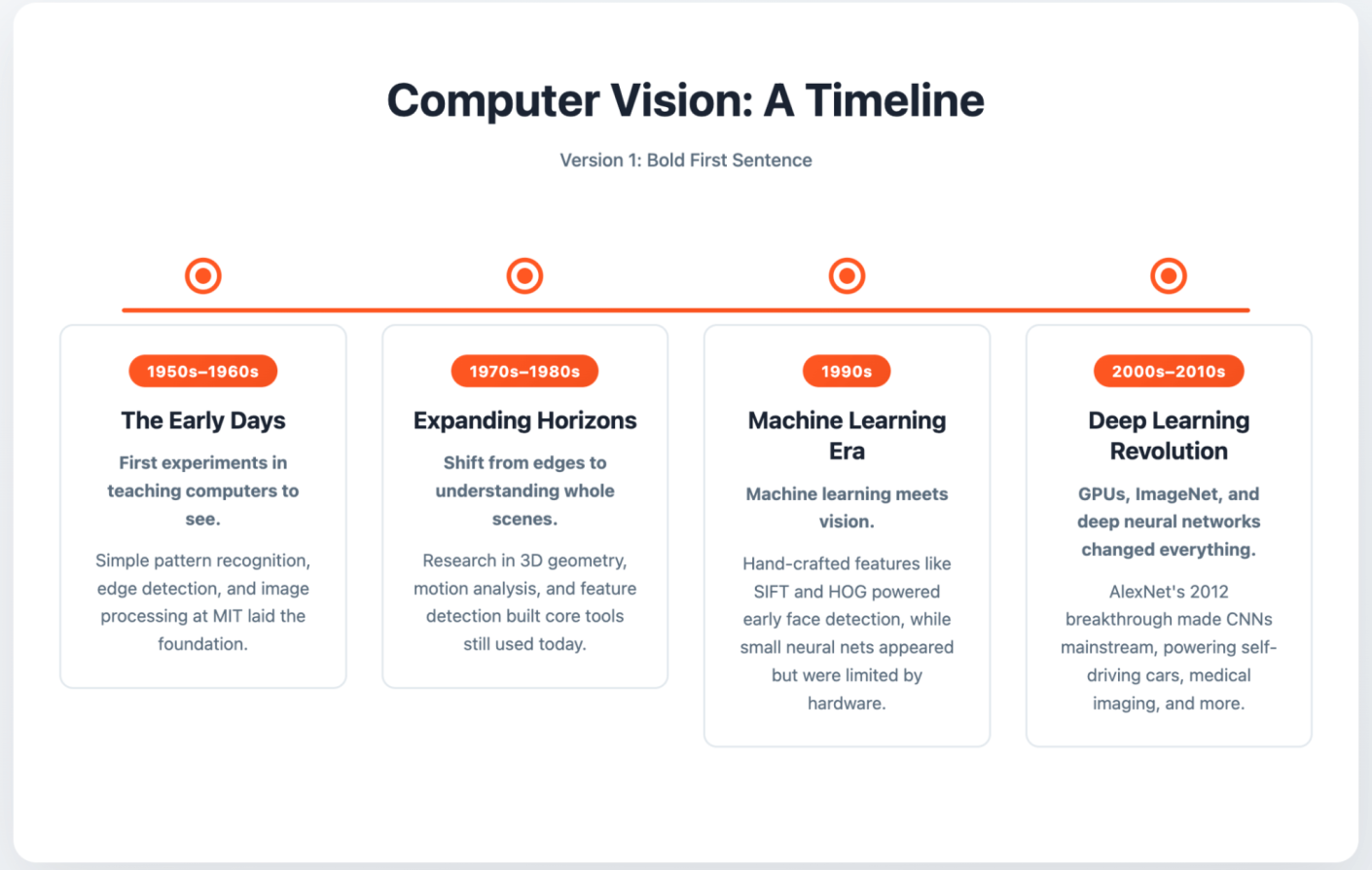

In the 1950s and ’60s, researchers poked at that question with the very first vision experiments.

The results were simple but groundbreaking for the time. Early systems could detect basic shapes or recognize patterns in black-and-white images.

At the Massachusetts Institute of Technology (MIT), researchers pushed things further with some of the first image processing experiments. The famous Summer Vision Project (1966) even aimed to make a computer describe what it saw in a scene – an ambitious goal at the time.

Techniques like edge detection (figuring out where one object ends and another begins) and basic object recognition showed that computers could interpret visual information.
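To make edge detection concrete, here is a minimal sketch using the Sobel operator, one classic way to find edges. It assumes a tiny grayscale image stored as a list of lists; the function name `sobel_edges` and the threshold value are illustrative choices, not something taken from the early systems described above.

```python
def sobel_edges(image, threshold=2.0):
    """Return a binary edge map (1 = edge) for a 2D list of grayscale values."""
    # Sobel kernels estimate horizontal (kx) and vertical (ky) brightness change.
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    # Border pixels are skipped because the 3x3 window does not fit there.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = sum(kx[j][i] * image[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(ky[j][i] * image[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            magnitude = (gx * gx + gy * gy) ** 0.5
            edges[y][x] = 1 if magnitude > threshold else 0
    return edges


# Toy image: dark on the left, bright on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
edges = sobel_edges(image)
for row in edges:
    print(row)
```

In this toy image the only marked pixels sit along the boundary between the dark and bright halves, which is the "where one object ends and another begins" idea in miniature.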

These experimental milestones laid the foundation for what would later become a massive field.

By the ’70s, the field shifted gears from “what if” experiments to more practical goals. Instead of just spotting edges, researchers wanted computers to understand whole scenes.

Could a system distinguish between a person walking down the street and a car driving by?

This interest drove work on three-dimensional (3D) geometry, motion analysis, and reconstructing environments from multiple images.

Feature detection — finding and tracking meaningful points in an image — was another big win during this era. It became the building block of things like object detection and image matching.

The technology was still too early for everyday use. Yet, the toolkit that researchers built in these decades is recognizable in today’s computer vision playbook.

Hand-crafted features and early neural networks taught computers to recognize patterns from data.

In the ’90s, the field of computer vision started borrowing from machine learning. Instead of coding every possible rule, researchers taught computers to learn patterns from data.

Hand-crafted features like SIFT (Scale-Invariant Feature Transform) and HOG (Histogram of Oriented Gradients) became standard.

These methods helped computers recognize objects even when the lighting, angle, or size changed, which is how early software such as face detection systems got off the ground.
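The idea behind gradient-based features can be sketched in a few lines. The example below is a heavily simplified illustration in the spirit of HOG, not the full algorithm (it has no cells or block normalization): it builds one normalized histogram of gradient directions for a whole image. The function name `orientation_histogram` and the choice of nine bins are assumptions made for this sketch.

```python
import math


def orientation_histogram(image, bins=9):
    """Normalized histogram of gradient directions (0-180 degrees)."""
    height, width = len(image), len(image[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Central differences approximate the brightness gradient.
            gx = image[y][x + 1] - image[y][x - 1]
            gy = image[y + 1][x] - image[y - 1][x]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue
            # Fold direction into [0, 180) so opposite gradients share a bin.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    total = sum(hist)
    return [value / total for value in hist] if total else hist


image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
brighter = [[value * 3 for value in row] for row in image]

hist = orientation_histogram(image)
hist_brighter = orientation_histogram(brighter)
print(hist == hist_brighter)  # True: the normalized descriptor ignores the brightness scale
```

Because the histogram is normalized, making the whole image three times brighter leaves the descriptor unchanged, which hints at why such features cope with lighting changes.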

Neural networks also made an appearance in this era. They were exciting, though limited by the hardware and datasets of the time. You could build small models, but scaling them wasn’t realistic yet.

This 20th-century period planted the seeds of the deep learning boom that came next.

Breakthroughs in GPUs, datasets, and deep neural networks unlocked modern computer vision.

Fast-forward to the 2000s, and everything changed. Graphics processing units (GPUs), originally built for gaming, suddenly gave researchers the power to train much bigger models.

At the same time, massive labeled datasets like ImageNet gave those models the data they needed to really learn.

This was the moment when convolutional neural networks (CNNs) stepped into the spotlight. The breakthrough came in 2012 with AlexNet, a CNN that crushed the ImageNet competition and dramatically cut error rates.

That result shocked the research community and is often seen as the turning point for modern AI.

A few key figures helped make this revolution possible:

Here’s LeCun demonstrating an early convolutional neural network that recognizes handwritten digits, back in 1993:

The work by these figures opened the door to real-world applications we now take for granted: everything from medical imaging and self-driving cars to Snapchat filters and face recognition.

Today, computer vision is a core technology shaping industries and daily life.

While human brains can only process so much at once, computer vision systems can analyze thousands of images per second, spot patterns invisible to the naked eye, and operate nonstop without fatigue.

That combination of precision and efficiency has opened the door to breakthroughs across many fields.

Consider player and ball tracking in sports as an example. In basketball and soccer, camera‐based systems like SportVU track the positions of every player and the ball many times per second, generating data on speed, distance moved, positioning, and interactions.

Here’s an overview of how it works:

Teams use this data to analyze tactics, broadcasters use it to show enhanced graphics, and fans use it to explore heat maps, movement trails, and advanced metrics.
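To show how tracked positions turn into metrics like distance and speed, here is a minimal sketch. It assumes positions are already expressed in meters on the playing surface and sampled at a fixed rate; the function name, the sample coordinates, and the sampling rate are hypothetical and do not describe how SportVU works internally.

```python
import math


def distance_and_speeds(positions, samples_per_second):
    """Return total distance (m) and per-step speeds (m/s) for one tracked player."""
    seconds_per_step = 1.0 / samples_per_second
    total_distance = 0.0
    speeds = []
    # Compare each position with the next one along the track.
    for (x0, y0), (x1, y1) in zip(positions, positions[1:]):
        step = math.hypot(x1 - x0, y1 - y0)
        total_distance += step
        speeds.append(step / seconds_per_step)
    return total_distance, speeds


# Hypothetical track: three positions sampled twice per second.
track = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
total, speeds = distance_and_speeds(track, samples_per_second=2)
print(total, speeds)  # 10.0 [10.0, 10.0]
```

Heat maps and movement trails are built from the same position samples, just aggregated differently.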

Let’s take a closer look at other areas where computer vision is making an impact today.

Computer vision lies at the heart of self-driving cars and advanced driver-assistance systems (ADAS).

These AI-powered automotive systems use cameras (and often combine them with radar) to perceive the world, detect objects, understand lanes, predict motion, and avoid collisions.

By giving cars the ability to detect pedestrians, cyclists, traffic signs, and other vehicles in real time, computer vision directly improves road safety.

On top of preventing accidents, computer vision makes driving more efficient by helping vehicles maintain safe distances, anticipate sudden lane changes, and adapt to tricky conditions like poor lighting or weather.

On a broader scale, autonomy promises to reduce traffic congestion, open up mobility for people who can’t drive, and eventually reshape urban environments that are currently dominated by parking and road infrastructure.

Here are some key examples:

Here’s an example of Berry AI’s drive-through timer in action:

From autonomous vehicles to operational insights in drive-thrus, computer vision enables real-time understanding of environments. As a result, systems make smarter, faster, and safer decisions.

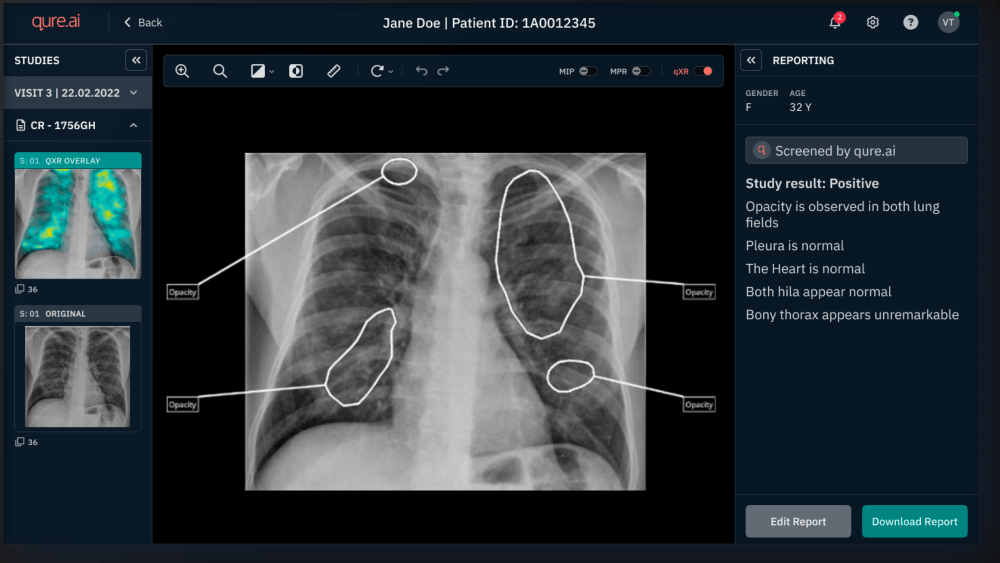

With patient data growing faster than clinicians can keep up, computer vision speeds up diagnosis, reduces diagnostic errors, and helps detect disease earlier.

The software analyzes huge volumes of medical data (like X-rays, CT scans, or MRIs) far faster than humans, so it’s easier to spot anomalies like tumors, fractures, or abnormal cell structures.

CV systems also standardize diagnosis, reducing the risk of human oversight or variation between doctors. By predicting how diseases progress and guiding treatment choices, the software also supports more personalized care.

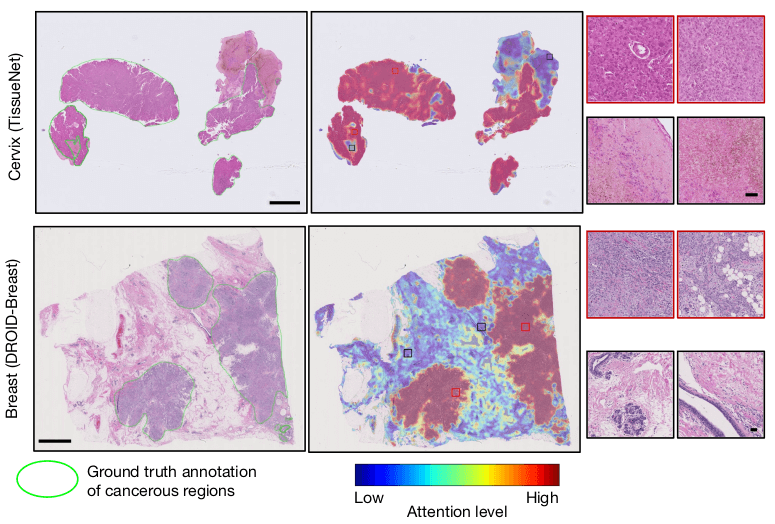

CHIEF (Clinical Histopathology Imaging Evaluation Foundation) is a good example of how AI models enhance healthcare.

Trained on millions of images, CHIEF can detect cancer cells, predict molecular tumor profiles, assess the tumor microenvironment, and forecast patient survival. The software outperformed many existing models across multiple cancer types.

Here’s an example of CHIEF in operation:

Beyond diagnostics, computer vision is also transforming medical robotics.

Robotic surgery systems use real-time image recognition to enhance precision during complex procedures, allowing surgeons to operate with smaller incisions and reduced risk.

Assistive robots guided by vision also help monitor patients, deliver medication, or support rehabilitation.

Ultimately, computer vision lightens the workload of overburdened medical staff while improving the quality of patient care.

As more of our lives move online and into digital systems, secure and reliable identity verification is critical.

Vision-based biometrics offer a balance of convenience and security that traditional methods (like passwords or physical keys) can’t match.

By recognizing unique features like faces, fingerprints, or irises, they make identity harder to forge and help secure sensitive spaces — from smartphones to border crossings.

Beyond authentication, visual AI systems enhance surveillance, support law enforcement in locating missing persons or suspects, and allow for smoother and safer access control in airports, workplaces, and high-security facilities.

A recent iris recognition platform by Fingerprint Cards can identify people at long capture distances (a “just glance” approach). The software has a very low false-acceptance rate (one in a million).
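A false-acceptance rate is the share of impostor attempts that a system wrongly accepts. Here is a minimal sketch of how it could be measured, alongside the matching false-rejection rate. The match scores and the threshold are hypothetical values chosen for illustration and have no connection to Fingerprint Cards’ test data.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false_acceptance_rate, false_rejection_rate) at a given threshold.

    A comparison is accepted when its match score is at or above the threshold.
    """
    false_accepts = sum(1 for score in impostor_scores if score >= threshold)
    false_rejects = sum(1 for score in genuine_scores if score < threshold)
    far = false_accepts / len(impostor_scores)
    frr = false_rejects / len(genuine_scores)
    return far, frr


# Hypothetical match scores between 0 and 1.
genuine = [0.90, 0.70, 0.40, 0.95]   # same person compared with themselves
impostor = [0.10, 0.20, 0.35, 0.80]  # different people compared

far, frr = error_rates(genuine, impostor, threshold=0.5)
print(far, frr)  # 0.25 0.25
```

Raising the threshold lowers the false-acceptance rate but rejects more genuine users, so a rate of one in a million implies a strict threshold tested against a very large number of impostor comparisons.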

While these biometric systems provide clear benefits, they also raise important challenges and require careful tuning. Privacy concerns, algorithmic bias, and errors such as false matches can have serious consequences when applied at scale.

Questions of consent, secure data storage, and legal safeguards continue to shape the debate about how biometrics should be used responsibly for everyone’s safety. We’ll explore ethical future applications of computer vision in our next section.

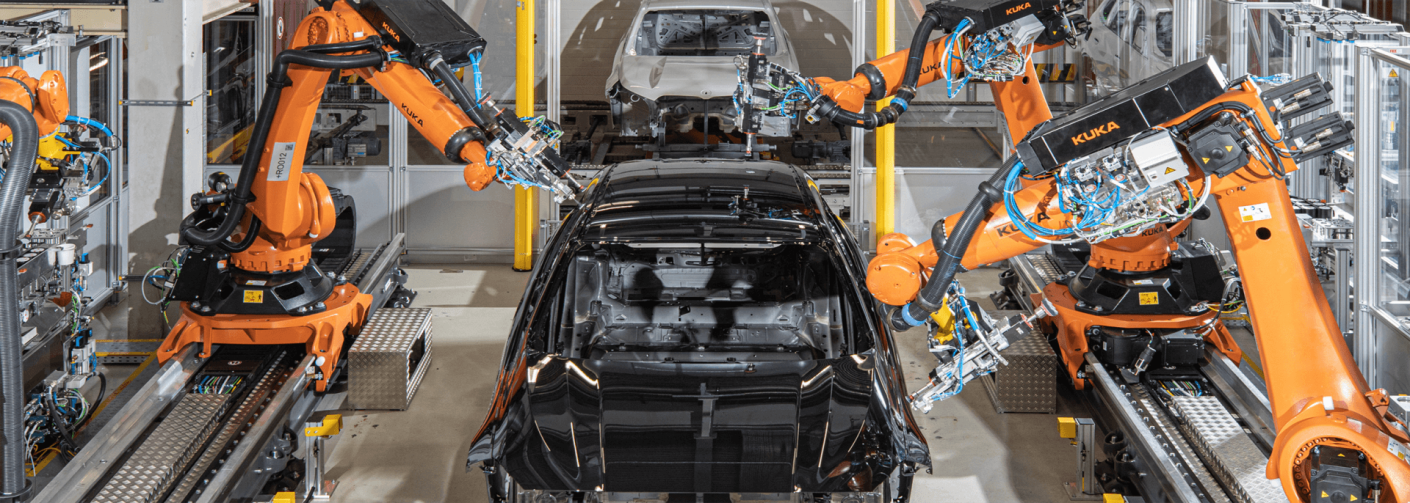

Modern manufacturing and logistics depend on speed, flexibility, and quality. Computer vision lets robots handle products of different shapes and orientations, which lowers the need for rigid, expensive tooling.

These features make factories more adaptable to changes in product design or customer demand. At the same time, vision-based inspection systems catch defects early, improving quality control and reducing waste.

Let’s take a look at some common examples:

Car manufacturer BMW (as well as many other car brands) uses robotics for automated assembly and inspections — referring to it as automated surface processing.

By combining precision with adaptability, industrial robotics powered by vision boost productivity, cut costs, and make global supply chains more resilient.

Computer vision has come a long way from detecting edges in grainy images. Today, it powers cars, helps diagnose diseases, and secures identities.

The next wave of innovation is about combining vision with other AI capabilities, tackling ethical concerns head-on, and embedding vision systems into everyday environments like cities, schools, and workplaces.

Before we delve into future advancements, here’s a quick preview of computer vision best practices we will explore in detail below:

| Area | Best Practice | Why It Matters |
|---|---|---|
| Governance | Define clear AI policies, consent frameworks, and accountability measures for computer vision deployments. | Ensures responsible use, builds trust with users, and aligns with regulations like the EU AI Act. |
| Ethics | Audit datasets for bias, ensure diversity, and implement privacy-preserving methods. | Reduces harmful outcomes, prevents discriminatory results, protects sensitive data, and supports fair, trustworthy AI. |
| Operations | Continuously monitor models, retrain with updated data, and integrate multimodal AI responsibly. | Keeps performance reliable as real-world conditions change, enables accurate vision and language interactions, and supports applications from AR/VR to smart cities. |
| Environment | Optimize algorithm efficiency, use greener data centers, and track environmental impact. | Minimizes energy use and resource consumption, making AI sustainable while powering large-scale vision applications like autonomous vehicles and urban monitoring. |

These practices provide a foundation for understanding the future directions and applications of computer vision.

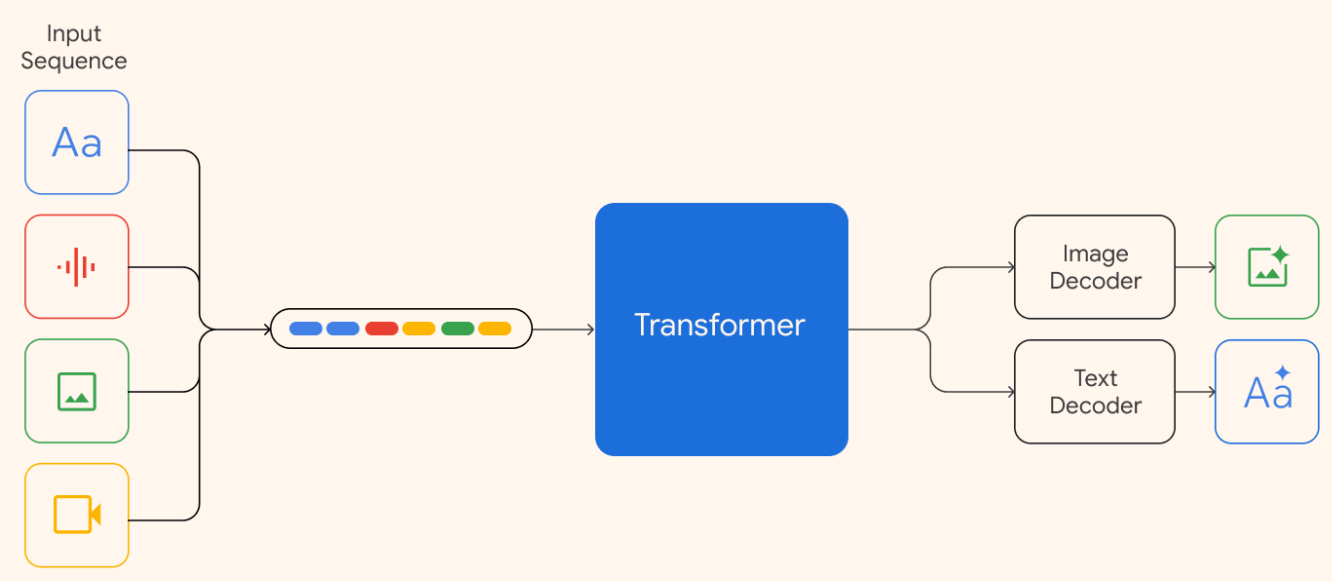

Pairing computer vision with natural language processing (NLP) allows machines to connect what they see with what we say or write. This process is known as multimodal AI.

Note: Multimodal AI can understand and combine information from different data types (think images, text, and audio) to perform computer vision tasks or answer questions more effectively. Combining these systems makes AI more natural and useful.

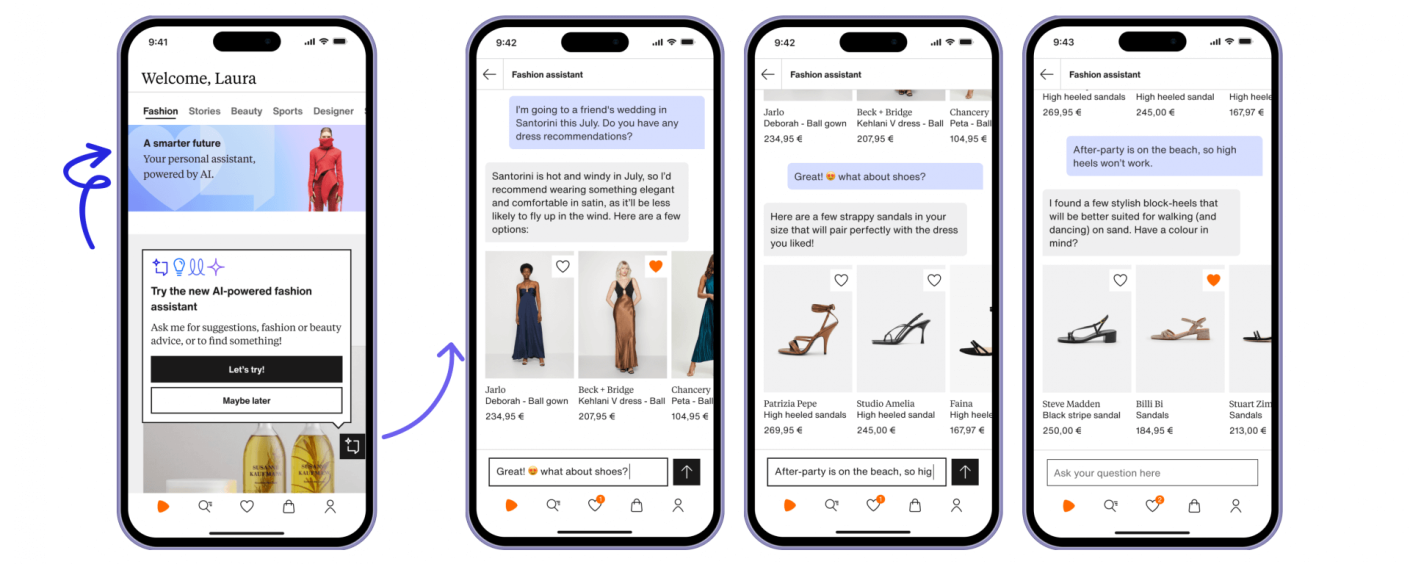

Imagine pointing your phone’s camera at a dish in a restaurant and instantly getting a recipe. Or snapping a photo of a product and asking an AI assistant to compare prices and reviews and offer personalized recommendations.

Models like OpenAI’s GPT-5, which accepts image input, and Google’s Gemini are also pushing this integration, allowing richer interactions that feel more human.

Here’s a breakdown of how Gemini does it:

As this technology develops, we’ll see smoother customer journeys, faster knowledge discovery, and more personalized digital experiences.

The rapid spread of computer vision has sparked big debates. How do we make sure these systems are fair, private, and trustworthy?

Concerns about bias in facial recognition, invasive surveillance, and mishandled data are very real.

Joy Buolamwini, the founder of the Algorithmic Justice League, comments on AI bias in facial recognition:

I would look into the data sets and I would go through and count: how many light-skinned people? How many dark-skinned people? How many women, how many men, and so forth. And some of the really important data sets in our field. They could be 70% men, over 80% lighter-skinned individuals. And these sorts of datasets could be considered gold standards.
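The counting audit Buolamwini describes can be sketched in a few lines. This is a minimal illustration that assumes each image comes with a metadata record; the field name `gender`, the sample records, and the 40% cutoff are hypothetical and are not taken from any real dataset or from her methodology.

```python
from collections import Counter


def group_shares(records, field):
    """Return each group's share of the dataset for one metadata field."""
    counts = Counter(record[field] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(shares, minimum_share):
    """List groups whose share falls below the chosen minimum."""
    return sorted(group for group, share in shares.items() if share < minimum_share)


# Hypothetical metadata for a ten-image dataset.
records = [{"gender": "male"}] * 7 + [{"gender": "female"}] * 3

shares = group_shares(records, "gender")
flagged = underrepresented(shares, minimum_share=0.4)
print(shares)   # {'male': 0.7, 'female': 0.3}
print(flagged)  # ['female']
```

A real audit would repeat this for several attributes and their combinations, since a dataset can look balanced on one field while still underrepresenting, for example, darker-skinned women.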

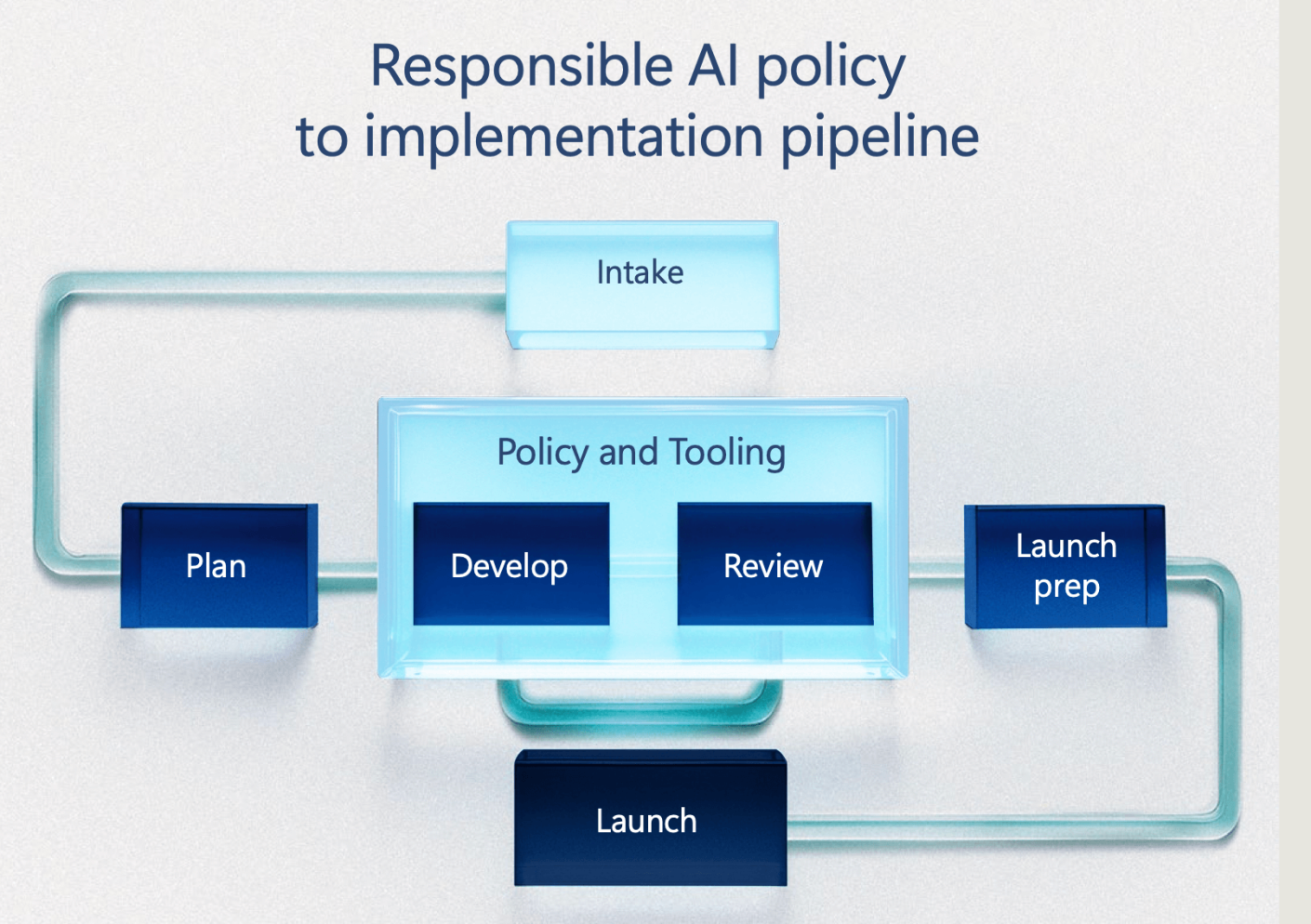

These concerns are real, but many systems are being built responsibly and ethically. Innovators and regulators are working on solutions rather than ignoring the problems.

Trust is essential for computer vision to be widely accepted, which is why companies are building safeguards into their systems from the start to reassure users and the wider public.

For example, organizations are improving data quality by ensuring training data is diverse and representative to prevent skewed outcomes:

MIT researchers developed a technique that identifies and removes specific data points contributing to model failures on minority subgroups, enhancing fairness without compromising accuracy.

National institutions and business leaders around the world also play an important safeguarding role:

Here’s a breakdown of Microsoft’s AI policy implementation process to minimize risk:

Ongoing research is expanding computer vision beyond detection to reasoning about the visual world.

Organizations like Princeton University are developing systems that combine computer vision, machine learning, human-computer interaction, and cognitive data science.

They focus on how AI can:

At the same time, they’re prioritizing fairness, accountability, and transparency. This research aims to ensure that future vision systems are not only more capable, but also ethical, fair, and adaptable across diverse populations.

Environmental concerns are also worth noting.

Data centers powering AI consume massive amounts of energy and water, rely on rare minerals, and generate electronic waste. All of these activities contribute to greenhouse gas emissions and resource depletion, and corporations like Google have begun taking steps to mitigate the impact.

UNEP emphasizes the need for sustainable AI practices, including measuring environmental footprints, improving algorithm efficiency, greening data centers, and integrating AI policies into broader environmental strategies to ensure AI benefits outweigh its costs.

Computer vision is powering entirely new experiences in the physical and digital worlds. Augmented reality (AR) and virtual reality (VR) rely heavily on real-time vision to track movements, overlay digital objects, and create lifelike environments.

Retail brands are already using AR to let customers “try on” clothes or visualize furniture in their living rooms:

In entertainment, VR headsets combined with vision-based hand tracking allow more immersive and interactive games:

Beyond consumer applications, the impact of computer vision is huge in infrastructure and urban life.

Smart cities, for example, use vision systems to monitor traffic flow, reduce congestion, and improve pedestrian safety. During emergencies, the tech can detect hazards or guide evacuation routes.

Take a look at Singapore. The Agency for Science, Technology and Research (A*STAR) has created an autonomous fleet to help the city’s elderly and disabled residents stay mobile.

At the same time, students at the National University of Singapore can be ferried around campus on a self-driving shuttle:

In construction and architecture, vision combined with VR is creating accurate virtual spaces before any building takes place. This technology is saving money and improving collaboration across teams worldwide.

In a case study on VR in construction, Kyle E. Haggard, Project Manager at DPR Construction, says:

[VR] has the potential to exponentially increase the integrity of a project from the time, cost, and quality standpoints.

By using VR and vision-based modeling, project teams can identify design conflicts, optimize workflows, and coordinate multiple disciplines before breaking ground.

The technology also enables immersive walkthroughs for clients, helping them visualize the final space and provide feedback while adjustments are still easy and inexpensive.

The path forward is clear: balance breakthroughs with responsibility, and computer vision will continue to transform society in ways that benefit everyone.

The “father of computer vision” attribution is sometimes debated.

Larry Roberts is often credited as an early founder for his groundbreaking 1963 MIT thesis on machine perception of three-dimensional objects.

Azriel Rosenfeld is likewise esteemed for pioneering research in digital image processing, pattern recognition, and early computer vision algorithms during the 1960s and ’70s, laying the groundwork for how machines analyze visual data.

Kunihiko Fukushima is also celebrated for developing the Neocognitron in the late ’70s, an early artificial neural network model that anticipated modern deep learning approaches to vision.

The three Rs are recognition, reconstruction, and reorganization:

Early computer vision focused on simple tasks like detecting edges, recognizing basic shapes, and interpreting black-and-white patterns. Systems could perform feature extraction and identify objects in controlled settings, but were limited in complexity.

Research at MIT and other institutions explored image classification and processing, pattern detection, and early object recognition, laying the foundation for modern vision systems.

Computer vision has evolved from detecting edges in grainy images to driving cars, diagnosing disease, and interpreting the world at scale.

No longer a lab experiment, it’s now a foundation for smarter interactions and richer customer experiences.

At Apizee, we know how important it is to align with cutting-edge AI trends. We’re attuned to the evolution of visual AI and thoughtfully leverage its potential to enhance efficiency and experiences without compromising human judgment.

Discover how Apizee can help your team deliver faster, smarter, and more personalized customer service through visual engagement.
